Artificial intelligence is becoming a go-to solution for enterprises looking to save costs, improve efficiency, ensure resource safety, avoid cyber attacks, and achieve several other favorable outcomes. However, AI adoption has reached the point where businesses need to ask whether they are designing, building, and deploying AI solutions in a way that is trustworthy for their workforce, customers, and society. Responsible AI is now the approach many enterprises are taking to ensure trustworthiness in their AI projects.

Understanding Responsible AI

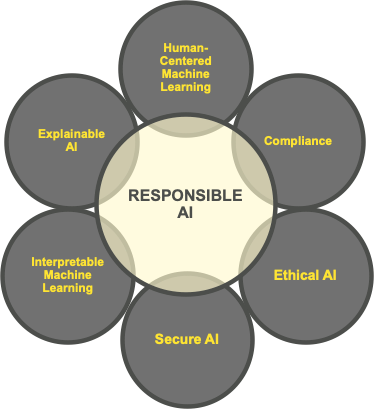

Responsible AI is critical for any company deploying AI/ML to augment business processes. Understanding the entire scope of risks of deploying AI solutions is imperative for success. Knowing how and when to trust your AI decreases risk. The growing field of Responsible AI is a push toward Artificial Intelligence practices aimed at trust, understanding, risk mitigation, and corporate social responsibility.

H2O.ai is unique in its Responsible AI offerings, and our Driverless AI illustrates this well. Driverless AI provides a robust explainability toolkit with a human-centered focus, automatic interpretability, an ethics and fairness toolkit, and a wealth of compliance resources. It offers the most comprehensive analytical suite to support Explainable AI and machine learning interpretability. H2O.ai’s commitment to Responsible AI aligns with the company’s core mission of democratizing AI and making it more accessible.

Elements of Responsible AI

- Explainable AI: Focuses on the ability to analyze an ML model after it has been developed.

- Interpretable Machine Learning: Uses transparent model architectures to make ML models more intuitive and understandable.

- Ethical AI: Focuses on sociological fairness in machine learning predictions, i.e., whether one group of people is being weighted or treated unequally.

- Secure AI: Debugging and deploying ML models with the same countermeasures against insider and cyber threats that are applied to traditional software.

- Human-Centered AI and ML: User interactions with AI and ML systems.

- Compliance: Following compliance requirements is a vital part of Responsible AI. Companies must ensure that their AI systems are aligned with regulations such as GDPR, CCPA, FCRA, and ECOA, and with guidance from bodies such as the FTC, CFPB, and OMB.

Major Features in H2O.ai Responsible AI Toolkit

- Disparate Impact Analysis: Answers the question: does the AI have a disproportionate effect on a specific group or class? (Lending is a common business case.)

- Shapley Values: Quantify how much a single variable contributes to a specific prediction, which can be compared with that variable’s importance at the global level.

- Sensitivity / What-if Analysis: Shows how sensitive predictions are to changes in input values and decision thresholds, and lets users adjust a single value or an entire attribute to see what might have caused an individual prediction to change its outcome.

- K-LIME: Fits simple linear surrogate models to the complex model’s predictions: one global model plus a local model for each k-means cluster of the data. Data scientists use the resulting coefficients as approximate explanations of how each variable drives predictions within each cluster.

- Surrogate Decision Tree: Trains a simple decision tree on the model’s predictions to show the most common combinations of features that drive them. For instance, a decision tree can visually display how likely a certain person would be to live or die in a crisis based on age and sex.

- Partial Dependence Plot: Shows how the model’s average prediction changes as a single variable is varied across its range, with the other variables held at their observed values.
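To make some of these ideas concrete, here is a minimal plain-Python sketch of three of them: a disparate impact ratio, exact Shapley values for a single prediction, and a partial dependence curve. It is not Driverless AI’s implementation or API; the function names, the toy model, and the convention of replacing "absent" features with a baseline value when computing Shapley values are all assumptions made for illustration. Exact Shapley enumeration grows exponentially with the number of features, so practical tools typically rely on approximations or model-specific algorithms.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Dict, List, Sequence

Row = Dict[str, float]
Model = Callable[[Row], float]


def disparate_impact_ratio(preds: Sequence[int], groups: Sequence[str],
                           protected: str, reference: str) -> float:
    """Favorable-outcome rate of the protected group divided by that of the
    reference group (preds are 1 for a favorable outcome, 0 otherwise)."""
    def rate(group: str) -> float:
        outcomes = [p for p, g in zip(preds, groups) if g == group]
        return sum(outcomes) / len(outcomes)
    return rate(protected) / rate(reference)


def shapley_values(model: Model, x: Row, baseline: Row) -> Row:
    """Exact Shapley values for one prediction. Features outside a coalition
    are set to their baseline value (an assumed convention)."""
    features = list(x)
    n = len(features)
    phi = {f: 0.0 for f in features}
    for f in features:
        others = [g for g in features if g != f]
        for size in range(n):  # coalition sizes 0 .. n-1
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                without_f = {g: x[g] if g in coalition else baseline[g] for g in features}
                with_f = {**without_f, f: x[f]}
                phi[f] += weight * (model(with_f) - model(without_f))
    return phi


def partial_dependence(model: Model, data: List[Row], feature: str,
                       grid: Sequence[float]) -> List[float]:
    """Average prediction over `data` with `feature` forced to each grid value."""
    return [sum(model({**row, feature: v}) for row in data) / len(data)
            for v in grid]


def toy_model(row: Row) -> float:
    """Hypothetical linear scoring model, for illustration only."""
    return 2.0 * row["income"] + 1.0 * row["tenure"]


if __name__ == "__main__":
    preds = [1, 1, 0, 1, 1, 0, 0, 0]
    groups = ["a"] * 4 + ["b"] * 4
    print(round(disparate_impact_ratio(preds, groups, "b", "a"), 3))  # 0.333
    print(shapley_values(toy_model, {"income": 3.0, "tenure": 1.0},
                         {"income": 1.0, "tenure": 1.0}))  # {'income': 4.0, 'tenure': 0.0}
    data = [{"income": 1.0, "tenure": 0.0}, {"income": 3.0, "tenure": 2.0}]
    print(partial_dependence(toy_model, data, "income", [0.0, 1.0]))  # [1.0, 3.0]
```

A disparate impact ratio well below 1.0 means the protected group receives favorable outcomes less often; a common rule of thumb, the four-fifths rule, flags ratios under 0.8. The Shapley values also sum to the difference between the prediction and the baseline prediction (7.0 - 3.0 = 4.0 here), which is what makes them useful for explaining an individual score.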

Why Responsible AI Matters

The severity of repercussions from not doing AI/ML responsibly varies.

Irresponsible AI can lead to everything from solving a business problem incorrectly to eroding public trust, and even to ethical issues around race, gender, or other forms of bias that have real impacts on people’s lives.

Companies also run the risk of non-compliance with regulations. Major failures include the Apple Card being investigated after gender discrimination complaints, Amazon’s hiring tool discriminating against women, and Microsoft’s AI Twitter bot posting racist and sexist tweets. Irresponsible AI can also create technical debt when regulations change and massive, expensive rework is required.

Why Issues Occur

Whenever algorithms are used to make decisions, there is a chance that something will go wrong. Key considerations for avoiding failures when deploying AI models are ensuring that the models do not encode human bias and do not ignore data drift.
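One common way to check a feature for data drift is the Population Stability Index (PSI), which compares how the feature is distributed in training data versus production data. The sketch below is plain Python under stated assumptions: equal-width bins taken from the training range, a small epsilon to avoid taking the log of zero, and illustrative function names that are not part of any H2O.ai product.

```python
import math
from typing import List, Sequence


def bin_proportions(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Share of values falling in each bin defined by sorted interior edges."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        idx = sum(1 for e in edges if v >= e)  # index of the bin containing v
        counts[idx] += 1
    return [c / len(values) for c in counts]


def population_stability_index(expected: Sequence[float], actual: Sequence[float],
                               n_bins: int = 10, eps: float = 1e-6) -> float:
    """PSI = sum over bins of (actual% - expected%) * ln(actual% / expected%)."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / n_bins
    # Assumed binning: equal-width bins over the training (expected) range;
    # production values outside that range fall into the first or last bin.
    edges = [lo + width * i for i in range(1, n_bins)]
    e = bin_proportions(expected, edges)
    a = bin_proportions(actual, edges)
    psi = 0.0
    for ei, ai in zip(e, a):
        ei, ai = max(ei, eps), max(ai, eps)  # avoid log(0) for empty bins
        psi += (ai - ei) * math.log(ai / ei)
    return psi


if __name__ == "__main__":
    train = [i / 100 for i in range(100)]
    prod = [0.5 + i / 200 for i in range(100)]  # hypothetical shifted data
    print(population_stability_index(train, train))  # 0.0
    print(population_stability_index(train, prod))
```

A commonly cited rule of thumb treats a PSI below 0.1 as little drift, 0.1 to 0.25 as moderate drift, and above 0.25 as significant drift, though thresholds should be tuned to each use case.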

Main factors causing AI not to be applied responsibly include:

- Speed to market/speed to production;

- Neglect and lack of oversight;

- Prioritizing accuracy at all costs;

- The need to remove data from the data set.

Each company is unique and needs to take a nuanced approach based on its use case. H2O.ai provides the framework, but every company needs to apply Responsible AI practices in its own way.

H2O.ai’s Competitive Advantage in Responsible AI

H2O.ai’s technologies support Responsible AI with a robust, flexible toolkit based on the most up-to-date standards in the space. Customers can tinker with it, get under the hood of a model, and see what might have moved the needle on how a score or decision was obtained. While H2O.ai does not provide consulting services around Responsible AI, the company aims to drive AI trust and adoption.

Case Study: How Responsible AI Improves Business Relationships

An Accenture study on Responsible AI found that 45% of respondents agreed that not enough is understood about the unintended consequences of AI. Although many business leaders have not yet committed to Responsible AI or to driving human-centric AI programs, others are taking the lead. H2O.ai worked with Jewelers Mutual to improve its customers’ trust in the company.

Jewelers Mutual, a provider of comprehensive insurance to both jewelers and consumers, uses H2O-3 open source and H2O Driverless AI to optimize coverage for its clients’ assets. The company uses the H2O.ai platforms to build AI models that derive rating systems from customer data, helping keep Jewelers Mutual’s insurance rates competitive. Earlier investments in analytics, AI, and machine learning relied on data from losses, customers, and multiple other sources. The company had also implemented Gradient Boosting Machine models, as well as an AutoML solution from DataRobot. However, it needed greater transparency as well as an explainable AI component.

H2O.ai’s Driverless AI offered the right level of transparency, along with the machine learning interpretability capabilities needed to explain and understand the company’s models. This helped improve insurance rates and, through greater transparency and trust in the products, the customer experience. In the future, Jewelers Mutual plans to use Driverless AI to determine customer lifetime value (LTV), build a customer 360 view, and map customer journeys.

Conclusion

Implementing Responsible AI is more than a technology requirement. It needs to be seen holistically in terms of people, processes, and technologies. Achieving the highest levels of explainability for AI models and reassessing AI/ML deployments periodically will be critical for firms looking to deploy AI responsibly.